The End Of The Commercial Real Estate Recession Is Finally Here

Since 2022, commercial real estate (CRE) investors have been slogging through a brutal downturn. Mortgage rates spiked as inflation ripped higher, cap rates expanded, and asset values fell across the board. The rallying cry became simple: “Survive until 2025.”

Now that we’re in the back half of 2025, it seems like the worst is finally over. The commercial real estate recession looks to be ending, and opportunity is knocking again.

I’m confident the next three years in CRE will be better than the last. And if I’m wrong, I’ll simply lose money or make less than expected. That’s the price we pay as investors in risk assets.

A Rough Few Years for Commercial Real Estate

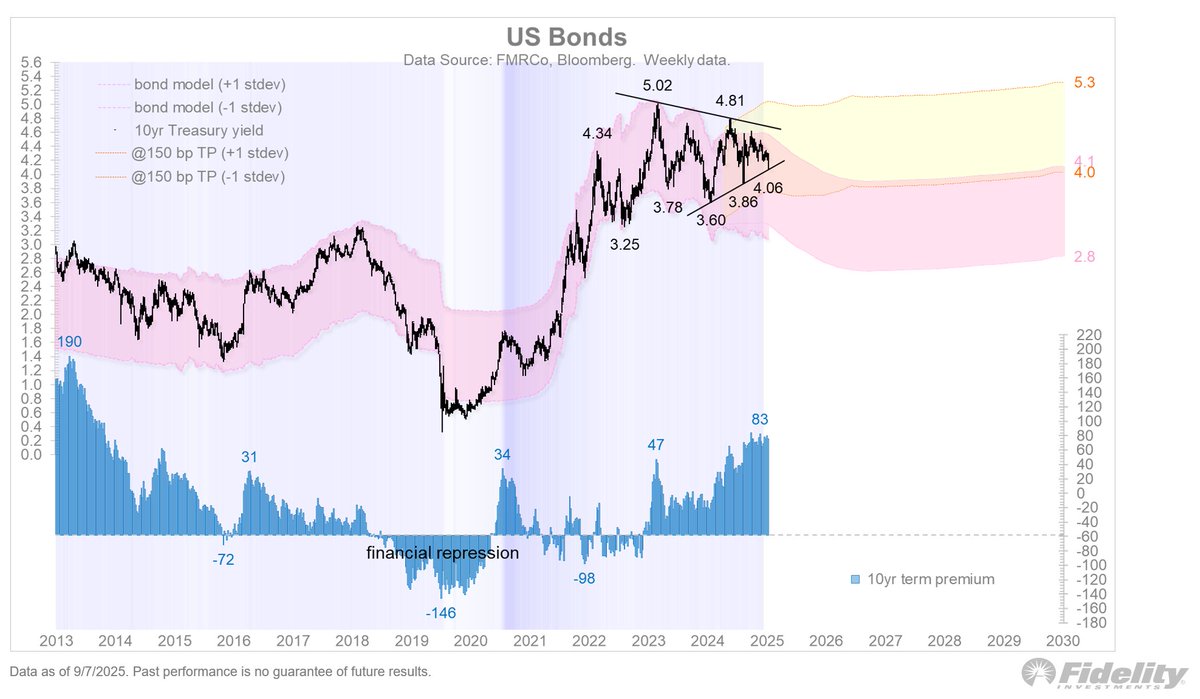

In 2022, when the Fed embarked on its most aggressive rate-hiking cycle in decades, CRE was one of the first casualties. Property values are incredibly sensitive to borrowing costs because most deals are financed. As the 10-year Treasury yield climbed from ~1.5% pre-pandemic (and a pandemic-era low of ~0.6%) to ~5% at the 2023 peak, cap rates had nowhere to go but up.

Meanwhile, demand for office space cratered as hybrid and remote work stuck around. Apartment developers faced rising construction costs and slower rent growth. Industrial, once the darling of CRE, cooled as supply chains, which had seized up during the pandemic, normalized.

With financing costs up and NOI growth flatlining, CRE investors had to hunker down. Headlines about defaults, extensions, and “extend and pretend” loans dominated the space.

Fast-forward to today, and the landscape looks very different. Here’s why I believe we’re at the end of the CRE downturn:

1. Inflation Has Normalized

Inflation has cooled from a scorching ~9% in mid-2022 to under 3% today. Lower inflation gives the Fed cover to ease policy and investors more confidence in underwriting long-term deals. Price stability is oxygen for commercial real estate, and it’s finally back.

2. The 10-Year Yield Is Down

The 10-year Treasury, which drives most mortgage rates, has fallen from ~5% at its peak to ~4% today. That 100 bps drop is meaningful for leveraged investors. Because a property’s value equals its net operating income (NOI) divided by its cap rate, a 1% lower borrowing cost can translate into 10%+ higher property values if cap rates compress by a similar amount.
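To make the cap rate math concrete, here is a minimal Python sketch of direct capitalization (value = NOI / cap rate). The $1,000,000 NOI and the three cap rate scenarios are hypothetical, and the sketch assumes cap rates fall one-for-one with the 10-year yield, which real markets need not do.

```python
def property_value(noi: float, cap_rate: float) -> float:
    """Direct capitalization: value = net operating income / cap rate."""
    return noi / cap_rate


def value_change_pct(cap_before: float, cap_after: float) -> float:
    """Percent change in value when the cap rate moves, holding NOI constant.

    NOI cancels out, so only the ratio of the two cap rates matters.
    """
    return (cap_before / cap_after - 1) * 100


if __name__ == "__main__":
    noi = 1_000_000  # hypothetical annual NOI in dollars
    # Hypothetical scenarios: each cap rate compresses by 100 bps,
    # assuming a one-for-one pass-through from the 10-year yield.
    for before, after in [(0.07, 0.06), (0.06, 0.05), (0.05, 0.04)]:
        v0 = property_value(noi, before)
        v1 = property_value(noi, after)
        print(f"{before:.0%} -> {after:.0%}: ${v0:,.0f} -> ${v1:,.0f} "
              f"(+{value_change_pct(before, after):.1f}%)")
```

Under these assumptions, a 100 bps compression lifts value by roughly 16.7%, 20.0%, and 25.0% respectively. The lower the starting cap rate, the bigger the percentage jump, which is why falling rates matter so much to CRE pricing.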

3. The Fed Has Pivoted

After more than nine months of holding steady, the Fed is cutting again. While the Fed doesn’t directly control long-term mortgage rates, its cuts at the short end of the curve often filter through to longer-term borrowing costs. The psychological shift matters too: investors now believe the tightening cycle is truly behind us.

4. Distress Is Peaking

We’ve already seen the forced sellers, the loan extensions, and the markdowns. Many of the weak hands have been flushed out. Distress sales, once a sign of pain, are starting to attract opportunistic capital. Historically, that transition marks the bottom of a real estate cycle.

5. Capital Is Returning

After two years of sitting on the sidelines, capital is coming back. Institutional investors are underweight real estate relative to their long-term targets. Family offices, private equity, and platforms like Fundrise are actively raising and deploying money into CRE again. Liquidity creates price stability.

Where the Opportunities Are In CRE

Not all CRE is created equal. While office may be impaired for years, other property types look compelling:

- Multifamily: Rent growth slowed but didn’t collapse. With new construction starts falling sharply since 2022, the market will likely be undersupplied over the next three years, putting upward pressure on rents.

- Industrial: Warehousing and logistics remain long-term winners, even if growth cooled from the pandemic frenzy.

- Retail: The “retail apocalypse” was overstated. Well-located grocery-anchored centers are performing, and experiential retail has staying power.

- Specialty: Data centers, senior housing, and medical office continue to attract niche capital. With the AI boom, data centers are likely to attract the most CRE investment capital.

As a capital allocator, I’m drawn to relative value. Stocks trade at ~23X forward earnings today, while many CRE assets are still priced as if rates are permanently at 2023 levels. That’s a disconnect worth paying attention to.
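One rough way to frame that relative value gap is to invert both numbers: a P/E of ~23X implies an earnings yield of about 4.35%, while a cap rate inverts into a price-to-NOI multiple. The 6% cap rate below is a hypothetical figure for illustration, not a market quote, and the comparison is approximate because NOI is measured before debt service and capital expenditures.

```python
def earnings_yield(pe_ratio: float) -> float:
    """Earnings yield is the inverse of the price-to-earnings multiple."""
    return 1 / pe_ratio


def implied_multiple(cap_rate: float) -> float:
    """Inverting a cap rate gives price as a multiple of NOI, a rough P/E analogue."""
    return 1 / cap_rate


if __name__ == "__main__":
    stock_forward_pe = 23.0  # ~23X forward earnings, as cited in the article
    hypothetical_cap_rate = 0.06  # illustrative assumption, not a market quote
    print(f"Stock earnings yield: {earnings_yield(stock_forward_pe):.2%}")
    print(f"CRE price/NOI multiple: {implied_multiple(hypothetical_cap_rate):.1f}X")
```

With those inputs, the property trades at about 16.7X NOI versus ~23X earnings for stocks, a 6% yield against roughly 4.35%.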

Don’t Confuse Commercial Real Estate With Your Home

One important distinction: commercial real estate is not the same as buying your primary residence. CRE investors are hyper-focused on yields, cap rates, and financing. Homebuyers, on the other hand, are more focused on lifestyle and utility.

For example, I bought a new home not to maximize financial returns, but because I wanted more land and enclosed outdoor space for my kids while they’re still young. The ROI on peace of mind and childhood memories is immeasurable.

Commercial real estate, by contrast, is about numbers. It’s about cash flow, leverage, and exit multiples. Yes, emotions creep in, but the market is far more ruthless.

Risks Still Remain In CRE

Let’s be clear: calling the end of a recession doesn’t mean blue skies forever. Risks remain:

- Office glut: Many CBD office towers are functionally obsolete and may never recover.

- Debt maturities: There’s a wall of loans still coming due in 2026–2027, which could test the market again.

- Policy risk: Tax changes, zoning laws, or another unexpected inflation flare-up could derail progress.

- Global uncertainty: Geopolitical tensions and slowing growth abroad could spill into CRE demand.

But cycles don’t end with all risks gone. They end when the balance of risks and rewards shifts in favor of investors willing to look ahead.

Why I’m Optimistic About CRE

Roughly 40% of my net worth is in real estate, with ~10% of that in commercial properties. So I’ve felt this downturn personally.

But when I zoom out, I see echoes of past cycles:

- Panic selling followed by opportunity buying.

- Rates peaking and starting to decline.

- Institutions moving from defense back to offense.

I recently recorded a podcast with Ben Miller, the CEO of Fundrise, who’s optimistic about CRE over the next three years. His perspective, combined with the improving macro backdrop, gives me confidence that we’ve turned the corner.

CRE: From Survive to Thrive

For three years, the mantra was “survive until 2025.” Well, here we are. CRE investors who held on may finally be rewarded. Inflation is down, rates are easing, capital is flowing back, and new opportunities are emerging.

The end of the commercial real estate recession doesn’t mean easy money or a straight-line rebound. Unlike stocks, which move like a speedboat, real estate moves more like a supertanker – it takes time to turn. Patience remains essential. Still, the tide has shifted, and this is the moment to reposition portfolios, acquire at attractive valuations, and prepare for the next upcycle.

The key is to stay selective, keep a long-term mindset, and align every investment with your goals. For me, commercial real estate remains a smaller, but still meaningful, part of a diversified net worth.

If you’ve been waiting on the sidelines, it might be time to wade back in. Because in investing, the best opportunities rarely appear when the waters are calm—they show up when the cycle is quietly turning.

Readers, do you think the CRE market has finally turned the corner? Why or why not? And where do you see the most compelling opportunities in commercial real estate at this stage of the cycle?

Invest In CRE In A Diversified Way

If you’re looking to gain exposure to commercial real estate, take a look at Fundrise. Founded in 2012, Fundrise now manages over $3 billion for 380,000+ investors. Their focus is on residential-oriented commercial real estate in lower-cost markets – assets that tend to be more resilient than office or retail. Throughout the downturn, Fundrise continued deploying capital to capture opportunities at lower valuations. Now, as the CRE cycle turns, they’re well-positioned to benefit from the rebound.

The minimum investment is just $10, making it easy to dollar-cost average over time. I’ve personally invested six figures into Fundrise’s CRE offerings, and I appreciate that their long-term approach aligns with my own. Fundrise has also been a long-time sponsor of Financial Samurai, which speaks to our shared investment philosophy.
